The Making of the First Digital Computer

In a scene from the 2014 movie The Imitation Game, the brilliant Alan Turing is urged by his colleague at Bletchley Park, Joan Clarke (played by Keira Knightley), to be more of a team player. Turing reluctantly agrees to try, and the next scene shows him stiffly sharing a bag of apples with his colleagues. The scene underlines one of the themes of this powerful movie: Turing was a genius, but the men and women assembled at Bletchley Park ultimately succeeded in breaking German codes when they worked together as a team. Walter Isaacson makes a similar point in his 2014 book, The Innovators: How a Group of Hackers, Geniuses, and Geeks Created the Digital Revolution. The history of technology is full of exceptional people who made important contributions working alone, but the stories of group work and partnerships are equally significant.

Isaacson suggests that the modern computer can trace its history back to team efforts as much as to individual ones, and to small incremental steps as much as to major breakthroughs. Different people, at different times and in different parts of the world, contributed bits and pieces. From the ideas of the eccentric Alan Turing to the work of teams of scientists at Bell Laboratories, the computer evolved. Over time, crude mechanical parts gave way to electronic components, which increased computing power, programmability, and speed. Today, the transistors on a microchip are a tiny fraction of the size of a pinhead, supercomputers perform quadrillions of calculations per second, and pioneering work in quantum computing propels us forward yet again. But where did it all begin?

An Early Blueprint: The Analog Computer

One of the earliest forms of computing was the analog device, a machine built to mimic, on a smaller scale, the physical phenomenon under investigation. For example, in the late 1800s Sir William Thomson (later Lord Kelvin) invented a tide-predicting machine. Using a simple set of pulleys and wires, it could project tide levels at a particular time and place. Early analog machines were adept at solving differential equations, but they were limited in that each could solve for only one variable at a time. Vannevar Bush at MIT improved on this with a method for integrating several variables in what he called a Differential Analyzer, a machine built from electric motors, mechanical gears, and pulleys. Although the Analyzer was a real advance for analog computing, it had definite limitations; for one, it had to be laboriously reset by hand for each new problem.
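Thomson's machine worked by adding up simple waves. Each pulley was driven up and down in a regular, sinusoidal motion that reproduced one periodic component (a "tidal constituent") of the tide, and a wire running over all the pulleys summed their motions into a single predicted water level. The Python sketch below performs the same sum numerically. The three periods are the standard values for well-known lunar and solar constituents, but the amplitudes, phases, and mean level are hypothetical placeholders; a real prediction would use constants fitted to one port's tide-gauge records.

```python
import math

# Hypothetical constituents: (amplitude in metres, period in hours, phase in radians).
# The periods are the standard M2, S2 and O1 values; amplitudes and phases are made up.
CONSTITUENTS = [
    (1.20, 12.42, 0.0),  # M2: principal lunar semidiurnal
    (0.40, 12.00, 0.8),  # S2: principal solar semidiurnal
    (0.25, 25.82, 1.5),  # O1: principal lunar diurnal
]
MEAN_LEVEL = 2.0  # hypothetical mean water level in metres


def tide_height(t_hours: float) -> float:
    """Add one cosine wave per constituent, as the machine's wire added up its pulleys."""
    height = MEAN_LEVEL
    for amplitude, period, phase in CONSTITUENTS:
        omega = 2 * math.pi / period  # angular speed in radians per hour
        height += amplitude * math.cos(omega * t_hours + phase)
    return height


if __name__ == "__main__":
    # Print a predicted tide table at three-hour intervals over one day.
    for hour in range(0, 25, 3):
        print(f"t = {hour:2d} h   height = {tide_height(hour):.2f} m")
```

Because the predicted level is just a sum of cosines, it never strays further from the mean than the total of the amplitudes, which is one quick sanity check on such a model.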

Binary Systems and Computers Merge

Claude Shannon, inspired by his work assisting Bush on the Differential Analyzer at MIT, went on to work at Bell Laboratories. There, Shannon explored the possibility of using relay circuits like those in the telephone network, which are essentially on-off switches, to solve problems in logic with a binary system of computation. He reasoned that the electromechanical relays used to route phone calls could perform Boolean algebra when arranged as “logic gates” and could ultimately solve complex math. These ideas would be useful to Alan Turing as he considered using machines to solve a range of mathematical problems. Turing had recently completed his paper describing a “Logical Computing Machine,” a machine that he envisioned could, in principle, carry out any mathematical computation that can be reduced to a sequence of simple, mechanical steps. The mathematician George Stibitz, with the help of a multidisciplinary team at Bell Laboratories, took Shannon’s ideas further and in 1939 completed the Complex Number Calculator, a machine built from some 400 electromagnetic relays. A machine with this many relays could march through logical sequences and complex arithmetic with astonishing consistency. The computing machine continued to evolve as it borrowed ideas and technologies from other systems and industries, and its general usefulness gradually improved.
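Shannon's core observation fits in a few lines of code. Two switches wired in series pass current only if both are closed (AND); two wired in parallel pass current if either is closed (OR); and a relay contact that opens when its relay is energized acts as NOT. From those three pieces you can build a circuit that adds binary numbers, a classic demonstration of the idea. The Python sketch below models each relay contact as a boolean; the function names are illustrative and are not taken from any historical circuit diagram.

```python
# Each relay contact is modelled as a bool: True = closed (current flows).

def series(a: bool, b: bool) -> bool:
    """Two contacts in series conduct only if both are closed: AND."""
    return a and b


def parallel(a: bool, b: bool) -> bool:
    """Two contacts in parallel conduct if either is closed: OR."""
    return a or b


def normally_closed(a: bool) -> bool:
    """A normally closed contact opens when its relay is energised: NOT."""
    return not a


def exclusive_or(a: bool, b: bool) -> bool:
    """True when exactly one input is closed: (a OR b) AND NOT (a AND b)."""
    return series(parallel(a, b), normally_closed(series(a, b)))


def full_adder(a: bool, b: bool, carry_in: bool) -> tuple[bool, bool]:
    """Add three bits; return (sum bit, carry bit)."""
    partial = exclusive_or(a, b)
    sum_bit = exclusive_or(partial, carry_in)
    carry_out = parallel(series(a, b), series(partial, carry_in))
    return sum_bit, carry_out


def add_binary(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry addition of two non-negative ints, one full adder per bit."""
    carry = False
    result = 0
    for i in range(width):
        a = bool((x >> i) & 1)
        b = bool((y >> i) & 1)
        bit, carry = full_adder(a, b, carry)
        result |= int(bit) << i
    return result  # a carry out of the top bit is discarded, as in fixed-width hardware


if __name__ == "__main__":
    print(add_binary(0b10111, 0b101010))  # 23 + 42, prints 65
```

Nothing in the adder is arithmetic in the everyday sense: every step is a switch being open or closed, which is exactly why telephone relays could be pressed into service as calculators.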

The Limitations of a Binary System and a Switch to a 19th Century Model

A binary system could only be so useful. The mathematician Howard Aiken lobbied Harvard University to build a computer based on Charles Babbage’s 19th-century blueprint for the Difference Engine. The Harvard Mark I, completed in 1944, was an imposing machine, about 51 feet long and 8 feet high, that could handle numbers of up to 23 digits. Many of its working parts were still mechanical, however, so it computed slowly. Once programmed, it ran on its own, working automatically through a series of calculations. Another milestone had been achieved in the long trek toward the modern computer.

Computing Speeds Get a Kick with Vacuum Tubes

Another huge step toward the modern computer was the growing use of electronic switches in place of the mechanical ones that had dominated so far. Engineers in different parts of the world were working on faster machines, among them Konrad Zuse in Germany and George Stibitz at Bell Laboratories. Isaacson gives special attention to John Vincent Atanasoff, who toiled in relative isolation in his lab at Iowa State College (now Iowa State University) and ultimately pieced together a machine that used vacuum tubes to increase computing speed. He also devised a way to provide the machine's memory using capacitors mounted on a rotating cylinder. Atanasoff’s machine was never fully completed, but his use of vacuum tubes produced computation speeds that exceeded anything developed up to that time.
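Capacitor memory has a catch: a capacitor slowly loses its charge, so a stored bit fades unless it is periodically read and rewritten. Atanasoff's design dealt with this by regenerating the charges as the cylinder turned. The toy Python model below illustrates why that matters. The leak rate, read threshold, and steps per rotation are invented numbers chosen only to make the effect visible; they are not measurements of the real hardware, and the class and function names are hypothetical.

```python
LEAK_PER_STEP = 0.97      # hypothetical: fraction of charge a capacitor keeps each time step
READ_THRESHOLD = 0.5      # hypothetical: charge above this level reads as a 1
STEPS_PER_ROTATION = 10   # hypothetical: time steps for one turn of the drum


class CapacitorDrum:
    """Toy model of bits stored as charge on capacitors mounted on a rotating drum."""

    def __init__(self, bits: list[bool]) -> None:
        self.charges = [1.0 if bit else 0.0 for bit in bits]

    def leak(self) -> None:
        """Every capacitor loses a little charge each time step."""
        self.charges = [c * LEAK_PER_STEP for c in self.charges]

    def read(self) -> list[bool]:
        return [c > READ_THRESHOLD for c in self.charges]

    def regenerate(self) -> None:
        """Read each bit and recharge it to full strength (or leave it empty)."""
        self.charges = [1.0 if bit else 0.0 for bit in self.read()]


def run(bits: list[bool], steps: int, regenerate: bool) -> list[bool]:
    """Let charge leak for `steps` steps, optionally regenerating once per rotation."""
    drum = CapacitorDrum(bits)
    for step in range(1, steps + 1):
        drum.leak()
        if regenerate and step % STEPS_PER_ROTATION == 0:
            drum.regenerate()
    return drum.read()


if __name__ == "__main__":
    pattern = [True, False, True, True, False]
    print(run(pattern, 100, regenerate=True))   # [True, False, True, True, False]
    print(run(pattern, 100, regenerate=False))  # [False, False, False, False, False]
```

The design choice the model highlights is timing: as long as each bit is refreshed before its charge drops below the read threshold, leaky parts can still hold data indefinitely.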

Discoveries From Around the World Come Together in One Machine

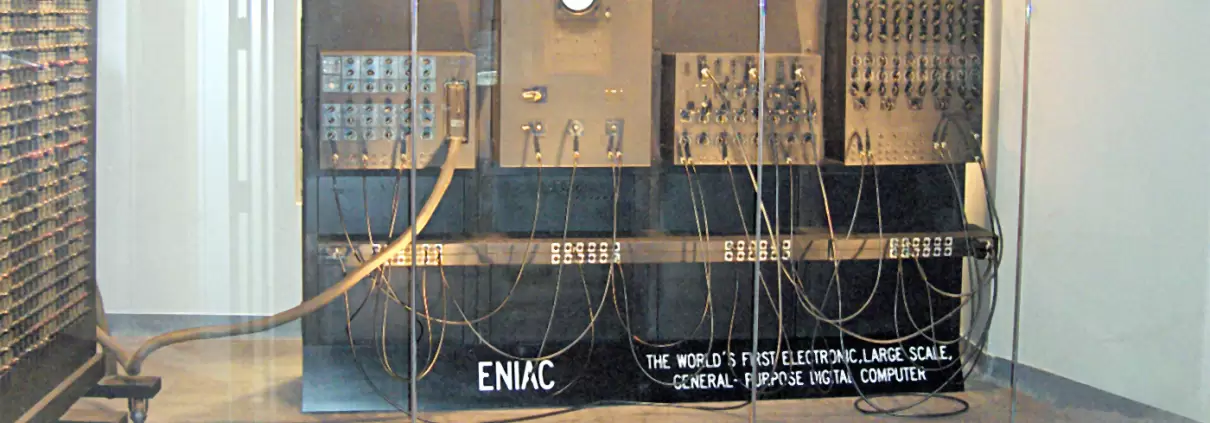

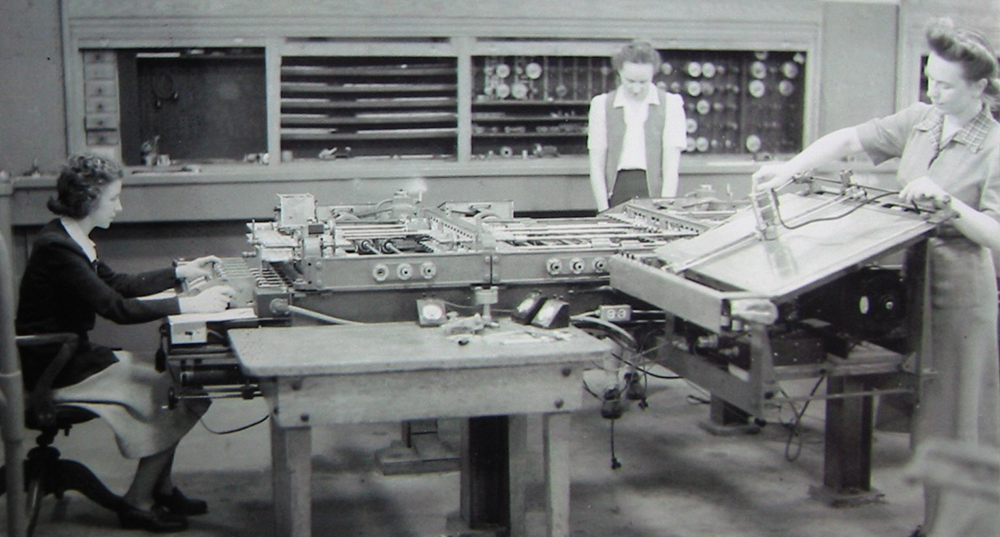

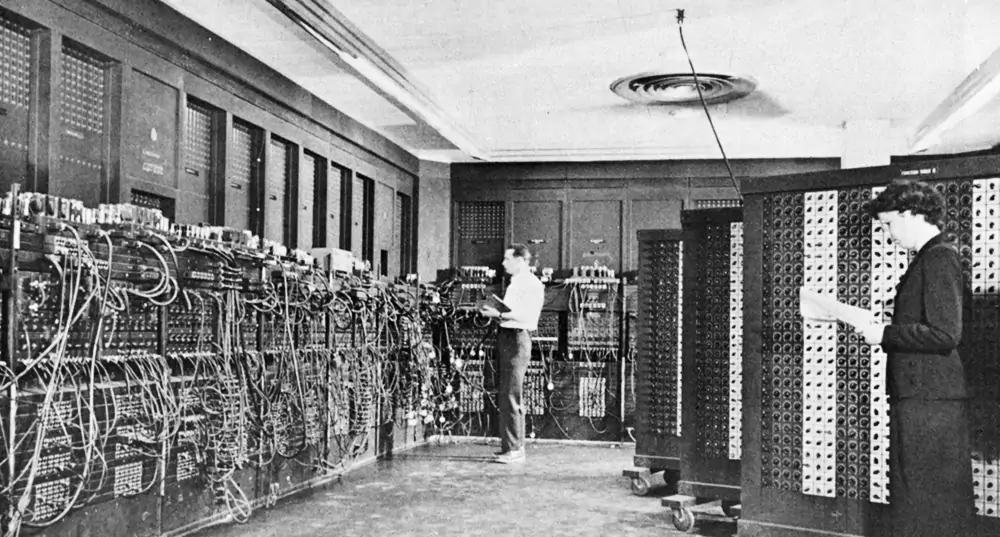

John Mauchly and J. Presper Eckert, two talented and enterprising engineers and mathematicians, received funding as part of the war effort to build a machine that incorporated many of the advances in modern computing. The product of this effort was the ENIAC (Electronic Numerical Integrator and Computer), a machine that represented the work of many people, working individually and in teams. It also represented the culmination of the progress made in computing over the previous 50 years. It was a digital machine, but it worked in decimal rather than binary, storing numbers in counters that could each hold ten digits. Unlike earlier machines, it was capable of conditional branching: like a modern computer, it could choose which sequence of operations (subroutine) to run next based on the results of its own calculations.
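To see how a machine can be digital without being binary, consider the ENIAC's counters. Each decimal digit was held by a ring of ten stages, only one of which was "on" at a time; an incoming pulse advanced the ring by one stage, and passing from 9 back to 0 sent a carry to the next digit. The Python sketch below is a simplified model of a ten-digit counter of this kind. It propagates carries immediately and ignores negative numbers, whereas the real machine handled carries and signs with its own timing and circuitry; the class names are hypothetical.

```python
class DecadeRing:
    """One decimal digit: a ring of ten stages, exactly one of which is 'on'."""

    def __init__(self) -> None:
        self.stages = [True] + [False] * 9  # start at digit 0

    @property
    def digit(self) -> int:
        return self.stages.index(True)

    def pulse(self) -> bool:
        """Advance one stage; return True if the ring wrapped from 9 to 0 (a carry)."""
        current = self.digit
        self.stages[current] = False
        nxt = (current + 1) % 10
        self.stages[nxt] = True
        return nxt == 0


class DecimalCounter:
    """Simplified ten-digit decimal counter; rings[0] holds the units digit."""

    def __init__(self, digits: int = 10) -> None:
        self.rings = [DecadeRing() for _ in range(digits)]

    def _pulse(self, position: int) -> None:
        # Send one pulse to a decade, rippling carries into higher decades.
        # A carry out of the top decade is lost (the counter overflows).
        while position < len(self.rings) and self.rings[position].pulse():
            position += 1

    def add(self, number: int) -> None:
        """Add a non-negative integer by sending each digit as a train of pulses."""
        if number < 0:
            raise ValueError("this sketch handles non-negative numbers only")
        for position, ch in enumerate(reversed(str(number))):
            for _ in range(int(ch)):
                self._pulse(position)

    @property
    def value(self) -> int:
        return sum(ring.digit * 10**i for i, ring in enumerate(self.rings))


if __name__ == "__main__":
    counter = DecimalCounter()
    counter.add(9876543210)
    counter.add(123456789)
    print(counter.value)  # 9999999999
    counter.add(1)
    print(counter.value)  # 0, because the final carry ran off the top decade
```

Adding a digit such as 7 means sending seven pulses, so the counter literally counts its way to the answer in base ten.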

According to Isaacson, the ENIAC represented the best that had been achieved in computer design up to that point: it was fully electronic, digital, and the most efficient calculator to date, able to perform about 5,000 additions per second.

Isaacson nicely sums up his ideas about the evolution of the digital computer in this excerpt from his book: “[T]he main lesson to draw from the birth of computers is that innovation is usually a group effort, involving collaboration between visionaries and engineers, and that creativity comes from drawing on many sources. Only in storybooks do inventions come like a thunderbolt, or a lightbulb popping out of the head of a lone individual in a basement or garret or garage.” His statement makes complete sense to me as I consider the ongoing group effort needed to keep our engineering software relevant. The work of a great team is never done.

References

Isaacson, Walter (2014). The Innovators: How a Group of Hackers, Geniuses, and Geeks Created the Digital Revolution. New York: Simon & Schuster.